The Compliance Arms Race Part 2: Why Traditional AI Failed Compliance Teams

In Part 2 of our series, The Compliance Arms Race, we discuss how traditional black-box AI solutions have failed compliance teams and why agentic AI is superior.

AI has reshaped industries from logistics to healthcare, but in AML and financial compliance, it hasn’t delivered on its early promise.

For Chief Compliance Officers (CCOs), the allure of automation came with justifiable caution. When the cost of error includes regulatory fines and reputational damage, blind trust in black-box systems is a nonstarter.

Early use cases promised greater efficiency in areas such as:

- Sanctions and watchlist screening

- PEP and adverse media checks

- Case triage in KYC/KYB onboarding

The hope was that analysts could spend less time on repetitive tasks like manual name-matching and false positive resolution, and more on investigating complex, high-risk cases.

The Starling Bank Case

But those promises quickly unraveled. Take Starling Bank: between 2017 and 2023, the UK neobank’s automated screening system checked customers against only part of the full financial sanctions list. Even after agreeing with the UK’s Financial Conduct Authority (FCA) to halt high-risk onboarding, the bank opened over 54,000 new accounts for high-risk customers.

The result? A £28.9 million fine and a public reprimand.

The problem wasn’t AI itself, but rather the wrong kind of AI. Traditional models lacked the domain-specific workflows and context-driven checks needed for AML compliance.

The Reality Check: Where Traditional AI Broke Down

While early AI tools were meant to streamline the compliance process, they ended up introducing new risks instead.

False Positives and False Negatives

For compliance leaders, one of the major flaws of traditional AI is inaccurate matching: the system generates incorrect or misleading outputs. For instance, an AI system designed for name screening against the OFAC Specially Designated Nationals (SDN) List might match a legitimate customer named “John Doe” to a sanctioned individual also named “John Doe,” even though their birthdates, nationalities, and known aliases are entirely different.

The result is a false positive alert that triggers an unnecessary manual review by an analyst, delays the customer’s transaction, and wastes valuable compliance resources.

Conversely, and far more dangerously, inaccurate matching can also produce a false negative. Imagine an AI system failing to flag a truly sanctioned entity, “Alpha Corp (Pvt) Ltd,” because its model, instead of matching on the complete name and associated identifiers, treated it as the similarly named but unsanctioned “Alpha Corporation Inc.”

Without context-aware, multi-attribute matching, a high-risk transaction could slip through unchecked, leading to AML breaches, regulatory penalties, and potential reputational damage.
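To make the idea of multi-attribute matching concrete, here is a minimal Python sketch. It assumes a simplified record with only a name, date of birth, and nationality, and it uses the standard library's `difflib` for name similarity. The `Party` type, the `screen` function, the 0.85 threshold, and the `clear` / `review` / `escalate` labels are all hypothetical and chosen for illustration; they do not describe any particular vendor's matching logic.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass(frozen=True)
class Party:
    name: str
    date_of_birth: Optional[str] = None  # ISO date string, e.g. "1985-03-02"
    nationality: Optional[str] = None    # ISO country code, e.g. "US"


def name_similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in [0, 1] based on difflib's ratio."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def screen(customer: Party, listed: Party, name_threshold: float = 0.85) -> str:
    """Return 'clear', 'review', or 'escalate' (hypothetical labels)."""
    if name_similarity(customer.name, listed.name) < name_threshold:
        return "clear"

    # Compare only the secondary identifiers that are present on both records.
    pairs = [
        (customer.date_of_birth, listed.date_of_birth),
        (customer.nationality, listed.nationality),
    ]
    known = [(c, l) for c, l in pairs if c is not None and l is not None]
    conflicts = sum(1 for c, l in known if c != l)

    if not known:
        return "review"    # name hit, but nothing to confirm or rule it out
    if conflicts == len(known):
        return "clear"     # every comparable identifier disagrees
    if conflicts == 0:
        return "escalate"  # name and all comparable identifiers agree
    return "review"        # mixed signals: leave it to an analyst


listed = Party("John Doe", "1961-11-20", "RU")
customer = Party("John Doe", "1985-03-02", "US")
print(screen(customer, listed))  # the name alone would have raised an alert
```

This sketch covers only the false positive side of the problem. Catching cases like the “Alpha Corp (Pvt) Ltd” example would also require normalizing legal suffixes and comparing registration identifiers, which is left out here.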

Black Box Decisions

As a CCO, you’re not just responsible for outcomes. You’re accountable for how those outcomes were reached.

With traditional AI, that’s where things can get murky. Many machine learning and deep learning models make risk decisions without offering any insight into why. No audit trail showing how sanctions, PEP, or adverse media checks were evaluated. No rationale. Just a probability score or binary yes/no conclusion.

Imagine your team reviewing an onboarding case where the AI clears a politically exposed person as low-risk. Later, it emerges (through adverse media) that the person is linked to sanctioned entities.

When regulators ask why the system approved the case, if your only answer is “That’s what the model predicted,” it won’t hold up. Not with the FCA, FinCEN, or any internal audit committee worth its salt.

What’s needed is an AI system that not only performs the checks but also documents the decision logic, linking every approval or escalation to verifiable data points across sanctions lists, corporate registries, and media sources.
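One way to picture a decision that documents its own logic is a record that cannot produce a rationale unless it cites evidence. The sketch below is illustrative only: the `Evidence` and `Decision` types, their field names, and the reference format are assumptions, not a description of any real product's data model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Evidence:
    source: str     # e.g. "sanctions_list", "corporate_registry", "adverse_media"
    reference: str  # identifier of the record consulted (hypothetical format)
    finding: str    # what the check found, in plain words


@dataclass
class Decision:
    case_id: str
    outcome: str  # e.g. "approve" or "escalate"
    evidence: List[Evidence] = field(default_factory=list)

    def rationale(self) -> str:
        """Build a plain-English rationale that cites every piece of evidence."""
        if not self.evidence:
            raise ValueError("a decision must cite at least one piece of evidence")
        lines = [f"Case {self.case_id}: {self.outcome}."]
        for item in self.evidence:
            lines.append(f"- {item.source} ({item.reference}): {item.finding}")
        return "\n".join(lines)


decision = Decision(
    case_id="K-42",
    outcome="escalate",
    evidence=[
        Evidence("adverse_media", "article-001", "named alongside a sanctioned entity"),
    ],
)
print(decision.rationale())
```

The design choice worth noting is the refusal to explain a decision with no evidence attached: “That’s what the model predicted” is simply not a state this record can represent.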

Audit Trail Gaps

Even when traditional AI systems get the decision right, they often fail to preserve the detailed, regulator-ready audit trail AML teams depend on. And for a CCO, that can make all the difference.

Let’s say your institution blocks a transaction linked to a politically exposed person (PEP) during sanctions screening. But when regulators or internal auditors ask for documentation (which screening lists were used, what rules or thresholds were applied, what contextual factors were considered), your system can’t produce the evidence.

- No timestamps.

- No decision logic.

- No lineage tracing how the alert was generated, reviewed, and resolved.

Now, even your compliant decision appears questionable.

This can turn an audit into a painful, time-consuming exercise in reverse engineering. Regulators may flag you for inadequate controls. Not because your risk judgment was wrong, but because you couldn’t show your work.

Let’s say that during a routine AML audit, regulators ask your team for documentation on 30 randomly selected blocked transactions. Your AI system may have flagged all of them correctly. But if it failed to log the sanctions, watchlist, and adverse media checks that led to the alerts, a regulator could still issue a warning for “insufficient documentation to support automated risk determinations.”

In compliance, trust isn’t just about the outcome. It’s about traceability across every KYC/KYB and transaction-screening step.

If you can’t walk regulators through every step of your system’s decision – inputs, logic, outcome, and human overrides – you’re not audit-ready. You’re exposed.
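As a rough illustration of what “inputs, logic, outcome, and human overrides” could look like in a log, the Python sketch below keeps an append-only list in which each entry carries a UTC timestamp and a SHA-256 hash that covers the previous entry's hash. The `AuditLog` class and its field names are hypothetical. Hash chaining makes tampering detectable rather than impossible, so a production system would still need write-protected storage behind it.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log; each entry's hash covers the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash everything except the hash field itself, with stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, inputs, logic, outcome, human_override=None):
        # All values must be JSON-serializable.
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "inputs": inputs,
            "logic": logic,
            "outcome": outcome,
            "human_override": human_override,
            "prev_hash": self.entries[-1]["hash"] if self.entries else None,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev = None
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

    def export(self):
        """Serialize the full log for auditors."""
        return json.dumps(self.entries, indent=2, sort_keys=True)


log = AuditLog()
log.append(
    inputs={"transaction_id": "T-1001", "lists_checked": ["OFAC SDN"]},
    logic={"rule": "name_similarity >= threshold", "threshold": 0.85},
    outcome="blocked",
)
log.append(
    inputs={"transaction_id": "T-1001"},
    logic={"rule": "analyst review"},
    outcome="blocked",
    human_override={"analyst": "reviewer-1", "decision": "confirmed"},
)
print(log.verify())
```

With a record like this, answering a request for 30 sampled transactions becomes a filter over exported entries instead of an exercise in reverse engineering.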

Alert Overload

AI promised to reduce the burden on compliance teams, but it often did the opposite, flooding analysts with thousands of alerts, most of which turned out to be false positives.

The problem?

Older or simpler AI and rule-based systems often lack context and prioritization logic. They flag transactions based on surface-level matches, such as names, keywords, or outdated thresholds, without factoring in critical differentiators like customer type, jurisdiction, historical transaction patterns, or previous screening results.

Analysts are forced to sift through massive volumes of irrelevant alerts with no clear way to tell which ones actually warrant investigation.

Let’s say an institution processes millions of transactions weekly. The AI flags 5,000 of them as potential sanctions or PEP matches based on name similarity to global watchlists. But 98% are false positives:

- Duplicate alerts

- Outdated list entries

- Benign entities with common names like “Mohamed Ali” or “Maria Gonzalez.”

There’s no risk ranking. No contextual score. Just a flat queue of alerts, all demanding the same level of manual review.

Your analysts spend hours triaging low-risk hits, while truly suspicious activity slips through the cracks.

Fatigue sets in. So does human error.

And when auditors ask why a high-risk transaction wasn’t escalated?

You’re left explaining how your team simply couldn’t get to it, buried under a mountain of AI-generated noise.

In AML, where timely detection and escalation are critical, alert overload isn’t just a nuisance. It’s a structural weakness. What’s needed are smarter alerts, not just more of them: filtered, ranked, and contextualized by systems that understand risk in the same layered, nuanced way your best analysts do.
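A minimal sketch of what “filtered, ranked, and contextualized” might mean in practice: drop duplicate alerts, then sort what remains by a score that combines name similarity with context such as jurisdiction and prior screening results. The `Alert` fields and the weights below are hypothetical placeholders; a real program would derive them from its own risk policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Alert:
    alert_id: str
    name_similarity: float        # 0..1, as reported by the screening engine
    high_risk_jurisdiction: bool
    previously_cleared: bool      # same customer and list entry cleared before
    is_duplicate: bool


# Hypothetical weights for illustration only.
WEIGHTS = {"name": 0.5, "jurisdiction": 0.3, "new_hit": 0.2}


def risk_score(alert: Alert) -> float:
    """Combine the name match with contextual factors into a score in [0, 1]."""
    score = WEIGHTS["name"] * alert.name_similarity
    if alert.high_risk_jurisdiction:
        score += WEIGHTS["jurisdiction"]
    if not alert.previously_cleared:
        score += WEIGHTS["new_hit"]
    return round(score, 3)


def prioritize(alerts: List[Alert]) -> List[Alert]:
    """Drop duplicates, then return the rest with the highest risk first."""
    unique = [a for a in alerts if not a.is_duplicate]
    return sorted(unique, key=risk_score, reverse=True)


queue = prioritize([
    Alert("A1", 0.90, high_risk_jurisdiction=False, previously_cleared=True, is_duplicate=False),
    Alert("A2", 0.88, high_risk_jurisdiction=True, previously_cleared=False, is_duplicate=False),
    Alert("A3", 0.95, high_risk_jurisdiction=False, previously_cleared=False, is_duplicate=True),
])
for alert in queue:
    print(alert.alert_id, risk_score(alert))
```

Here the alert with the slightly weaker name match (A2) rises to the top because of its context, while the previously cleared hit (A1) drops down the queue and the duplicate (A3) never reaches an analyst.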

The Compliance-First Shift: What CCOs Actually Need

Most AI systems weren’t built with compliance in mind. But compliance isn’t just another enterprise use case. It’s a high-stakes domain with unique demands, such as:

- Auditability: Every decision must be traceable.

- Policy alignment: Systems must follow institution-specific rules.

- Escalation logic: Alerts must triage correctly, not just trigger.

- Data lineage: Inputs, logic, and outcomes need to be logged.

- Jurisdictional nuance: Risk looks different across geographies and regulators.

That’s where agentic AI comes in. Unlike older black-box models, Parcha’s agentic systems are purpose-built for high-accountability environments like yours. They don’t just make predictions; they explain their conclusions step by step.

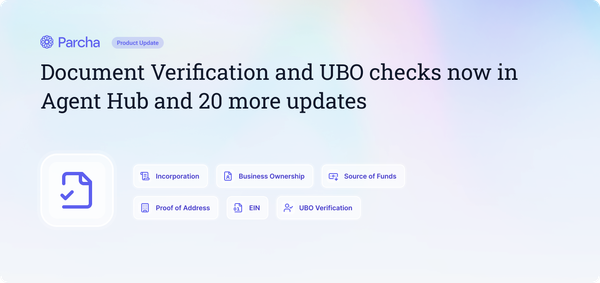

Agentic AI for Compliance

- Maintains full decision lineage for every AML/KYC/KYB workflow, including sanctions lists, PEP checks, adverse media sources, and applied rules

- Generates plain-English rationales for every decision, explaining why an alert was triggered or cleared

- Adapts to risk thresholds defined by your team, instead of relying on generic models

- Provides immutable, exportable audit logs for every review cycle

Compliance-Ready AI Checklist

When evaluating an AML-focused AI platform, you need to keep certain factors in mind:

- Ask: Can the system explain its decisions to a regulator?

- Check: Is there data lineage for every alert and outcome?

- Ensure: Thresholds are human-defined and override-able

- Verify: Audit logs are immutable and exportable

- Pilot: Run a limited deployment and pressure-test audit readiness

In short, compliance leaders don’t need to become data scientists. They do, however, need to ask the right questions when choosing an AI tool or vendor, because they need tools that make AML operations faster, more accurate, and fully defensible under audit.

Click here to learn how compliance-first AI systems like Parcha are built to address these challenges and more.

This is part 2 of our series The Compliance Arms Race - you can read part 1 here: